Real-World Learning

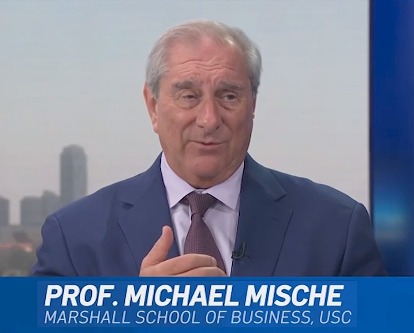

Over its 100-year history, Marshall has forged deep ties with dynamic companies and business leaders, not only in Southern California but across the country and around the world, creating unlimited opportunities for its students across a broad range of industries.

USC Marshall integrates its core curriculum with a spectrum of hands-on learning opportunities so that its students can take what they learn in the classroom and apply it to the real world, in real time. In the process, they learn more about themselves, the dynamics of teams, and the broader societal context in which business operates. Learning by doing prepares Marshall graduates to thrive in the workplace from the start.