AI Bottlenecks: A Q&A with Georgios Petropoulos on Obstacles to an Economic Boom

Assistant Professor Georgios Petropoulos discusses his research on artificial intelligence bottlenecks and the factors preventing the technology from catalyzing an economic surge.

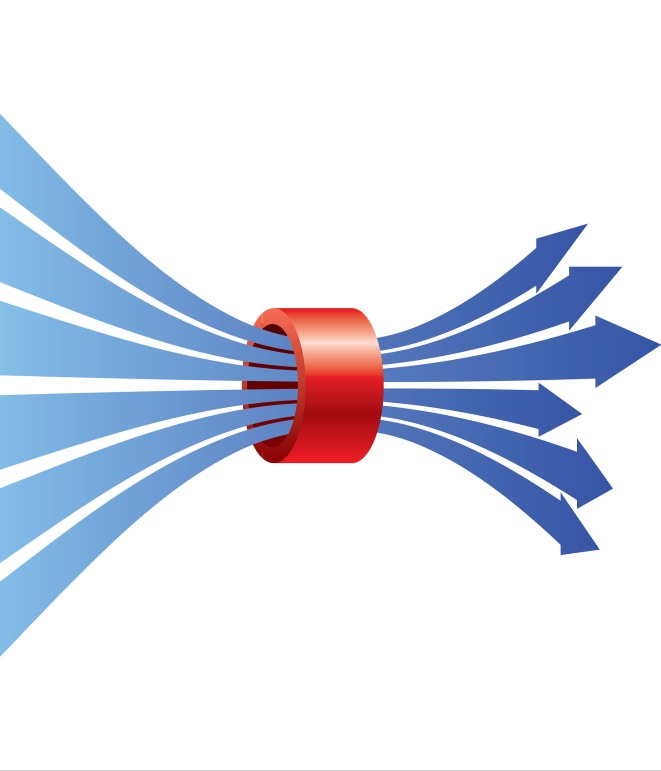

[iStock Photo]

Artificial intelligence (AI) is permeating the business world, from communications to data analysis to financial planning. So why hasn’t the hyper-efficient technology resulted in a surge in productivity and economic growth?

In their paper, “Artificial Intelligence: Increasing Labour Productivity in a Responsible Way,” Georgios Petropoulos, assistant professor of data sciences and operations, and his co-author Mamta Kupar (Accenture) discuss the AI productivity paradox: a slowdown in productivity despite advancements in digital technology. The assistant professor also explores the obstacles, or bottlenecks, preventing a potential economic boom.

In the following conversation, Petropoulos defines these bottlenecks and proposes solutions to catalyze growth, collaboration, and equal opportunity in the world of AI.

Interviewer: Could you explain the concept of AI bottlenecks?

Georgios Petropoulos: When we look at productivity statistics to see how AI affects the economy, using standard methodologies, we see that it has not had much effect so far. Some colleagues have named this the “AI productivity paradox.”

My research is about how AI can help society, how it can help the economy, and how we can get more benefits from AI. AI bottlenecks are important obstacles to the wide diffusion of AI technologies. If we address the AI bottlenecks, AI will be adopted widely across many more corporations, and only then will we be able to see the aggregate positive impact that can significantly improve our lives and our living standards.

Interviewer: What are those bottlenecks?

GP: They are obstacles related to key inputs that make AI work. There are three key inputs that I’m studying in particular: AI talent, data, and investment in infrastructure.

Let’s start with the first bottleneck: AI talent. Basically, this translates into skill shortages. If we look at job postings data over the period 2010–2019, more than 26% of AI talent is taken by just 10 firms. We have an AI talent shortage for the rest of the firms. In order for AI to be adopted widely, we need to achieve a better distribution of AI talent. We need to remove the skill shortages and allow firms to find the talent they need in order to innovate.

How do we achieve that? We need to put a multidimensional policy approach into play. We need to talk about education and how we can reform it. We can talk about training programs that help people readjust, build on the skills they have already acquired, and apply them in the context of the AI era. We can talk about labor mobility: how easy it is to move from one country to another or from one company to another. Of course, immigration policies can also be relevant here to guarantee that US firms, especially small firms, can also bring in AI talent from abroad.

Then there’s the second bottleneck, which is data concentration. Many of the big firms have organized platform ecosystems in which the big tech firm has a central role in collecting information from users. By being at the center, they have a great information advantage, which they can also monetize in different ways.

This presents a big question: Should these firms keep their data for themselves? Who owns this data? One idea that we put forward has to do with developing data-sharing mechanisms so that these big firms share this information with the smaller firms that participate in their ecosystems. By receiving data that is relevant to their business focus and value proposition, the smaller firms can train their AI systems and improve them in order to deliver better services and products.

If we create markets for data, of course we need to fully respect privacy rules and principles. We should empower users to have more control over their personal data. Instead of control resting with the big tech platforms, it is the users who should decide how their data is used and whether they would like to share it with a firm other than the big platform.

The third bottleneck refers to investment and infrastructure costs. Here we should take a more traditional economic approach. We need to rely on insights from the economics of innovation to incentivize firms to undertake larger investments in order to build the proper infrastructure and invest in the computing power necessary for their AI systems to run and produce efficient results. The co-investment model that has traditionally been applied in telecommunication networks can also be relevant in this context.

Interviewer: How do you address these bottlenecks? Through policy? Regulation?

GP: We need to think carefully about the policy tools that are relevant here. One important aspect of AI is that it is a general-purpose technology, which means it has a pervasive impact on many different industries and many different contexts. That means the policy tools should be multidimensional.

First of all, we need to understand what the key challenge or problem is and then make sure we address it adequately. On one hand, the firms that are front-runners in creating or using these AI technologies should not be discouraged from doing so. At the same time, we need the smaller players to have an equal chance to access these technologies and use them efficiently to deliver better output and achieve successful market outcomes. That should be the objective of policy tools and potential regulations.

Interviewer: How do you balance the integration of AI with concerns over automation?

GP: One of the biggest concerns has to do with the future of work. In order to make the new AI-enabled production model succeed, we need a participative approach in which automation and AI technologies are introduced only in ways that complement human work. We don’t need to automate everything, because this does not guarantee results superior to those of a model in which humans and AI play complementary roles. Even Elon Musk has admitted that excessive automation at Tesla was a mistake. We should not automate just to automate. That should be an important lesson for corporations as they move forward in this digital transformation and try to innovate, make their production processes more efficient, and produce higher-quality products and services. Humans are still very valuable in this process.

In the age of generative and agentic AI systems, we need to make sure that human judgment and opinion are freely shaped and not manipulated by these systems. We created AI to serve humanity. Manipulation of human behavior is a great risk, and we should develop safeguards to ensure that it is human judgment and opinion that prevail when we use these systems to reach decisions, especially since AI hallucinations are not uncommon.
